- Paper Accepted to ICLR 2025, One of the World’s Most Prestigious AI Conferences

- Expected to Contribute to the Democratization of AI by Enhancing Access to High-Performance Models

▲ Professor U Kang, Department of Computer Science and Engineering, Seoul National University

Seoul National University College of Engineering announced that a research team led by Professor U Kang of the Department of Computer Science and Engineering has developed an innovative artificial intelligence (AI) technology that compresses deep learning models without a significant drop in performance, even when training data cannot be used because of privacy or security concerns.

The paper has been accepted for presentation at the 13th International Conference on Learning Representations (ICLR 2025), which will be held in Singapore for five days starting April 24. ICLR is widely regarded as one of the most prestigious academic conferences in machine learning and deep learning.

One of the major challenges in real-world deep learning training is limited access to data because of privacy or security concerns. To address this, Zero-shot Quantization (ZSQ) techniques have been developed: they quantize a model, converting its weights and activations to low-bit numbers, without requiring any of its training data.
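For readers unfamiliar with quantization itself, the minimal NumPy sketch below shows generic symmetric 8-bit quantization of a weight tensor using a single scale. It is an illustrative assumption, not the quantizer used by SynQ or any particular ZSQ method, and the helper names `quantize_symmetric` and `dequantize` are hypothetical. The rounding error it exposes is the accuracy loss that ZSQ methods try to recover without real data.

```python
import numpy as np


def quantize_symmetric(weights: np.ndarray, num_bits: int = 8):
    """Hypothetical helper: map float weights to signed integers using one
    per-tensor scale (generic symmetric uniform quantization)."""
    assert 2 <= num_bits <= 8, "this sketch stores codes in int8"
    qmax = 2 ** (num_bits - 1) - 1  # e.g. 127 for 8 bits
    scale = float(np.max(np.abs(weights))) / qmax  # largest magnitude maps to qmax
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any positive scale works
    codes = np.clip(np.round(weights / scale), -qmax, qmax).astype(np.int8)
    return codes, scale


def dequantize(codes: np.ndarray, scale: float) -> np.ndarray:
    """Hypothetical helper: recover approximate float values from the codes."""
    return codes.astype(np.float32) * scale


w = np.array([0.5, -1.27, 0.003, 1.0], dtype=np.float32)
codes, scale = quantize_symmetric(w)
w_hat = dequantize(codes, scale)
print("codes:", codes)
print("max rounding error:", np.max(np.abs(w - w_hat)))
```

Each weight now occupies one byte instead of four, and the per-weight rounding error is at most half the scale.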

However, existing ZSQ techniques, which fine-tune the quantized model on synthetic data generated from the pre-trained model, suffer from critical limitations: performance degradation caused by noise in the synthetic data, inaccurate predictions based on the wrong features, and errors from hard labels assigned to difficult samples.

To overcome these issues, Professor Kang’s team proposed a new ZSQ technique called “SynQ” (Synthesis-aware Fine-tuning for Zero-shot Quantization), which compresses deep learning models effectively while preserving their performance, all without using training data. SynQ is considered a significant advancement because it can be readily applied on top of existing ZSQ techniques in data-scarce environments.

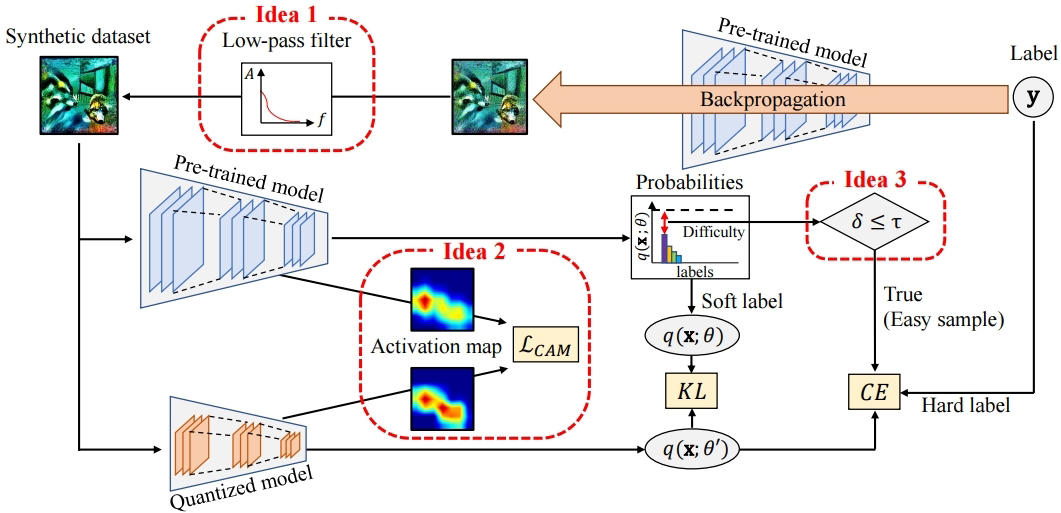

The research team overcame the limitations of conventional ZSQ with three core techniques. First, they applied a low-pass filter to remove high-frequency noise from the synthetic data. Second, they aligned the Class Activation Maps (CAMs) of the pre-trained and quantized models, so that the quantized model bases its predictions on the same image regions as the pre-trained model. Lastly, to avoid errors caused by hard labels on difficult samples, they used soft labels (answers expressed as probabilities over the classes) for those samples instead, preventing the model from learning incorrect targets.
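The low-pass filtering idea can be illustrated with a minimal NumPy sketch. It assumes an ideal (hard-edged) circular mask in the 2D Fourier domain and a hypothetical `cutoff_ratio` parameter; the function name `low_pass_filter` is also hypothetical, and the filter shape and cutoff used in the SynQ paper may differ. Frequencies far from the center of the shifted spectrum correspond to fine, pixel-level patterns, which is where synthetic-image noise tends to live.

```python
import numpy as np


def low_pass_filter(image: np.ndarray, cutoff_ratio: float = 0.25) -> np.ndarray:
    """Hypothetical helper: keep only spatial frequencies inside a centered
    circle of the 2D spectrum.

    image: real array of shape (C, H, W).
    cutoff_ratio: assumed parameter; pass-band radius as a fraction of min(H, W) / 2.
    """
    _, h, w = image.shape
    # FFT over the spatial axes, then move the zero frequency to the center.
    spectrum = np.fft.fftshift(np.fft.fft2(image, axes=(-2, -1)), axes=(-2, -1))
    yy, xx = np.ogrid[:h, :w]
    dist = np.sqrt((yy - h // 2) ** 2 + (xx - w // 2) ** 2)
    mask = dist <= cutoff_ratio * min(h, w) / 2  # ideal circular mask, shape (H, W)
    filtered = spectrum * mask  # broadcast the same mask over all channels
    # Undo the shift, invert the FFT, and drop negligible imaginary parts.
    restored = np.fft.ifft2(np.fft.ifftshift(filtered, axes=(-2, -1)), axes=(-2, -1))
    return np.real(restored)


# Example: a flat gray image corrupted by a pixel-level checkerboard pattern.
yy, xx = np.mgrid[:32, :32]
noisy = 0.5 + 0.2 * ((-1.0) ** (yy + xx))[None]  # shape (1, 32, 32)
smooth = low_pass_filter(noisy, cutoff_ratio=0.25)
print(np.abs(smooth - 0.5).max())  # near zero: the checkerboard is removed
```

An ideal mask keeps the sketch short; a smooth (e.g. Gaussian) mask would avoid ringing artifacts near edges, at the cost of one more parameter.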

In short, SynQ improves the performance of quantized models by denoising the synthetic data generated from the pre-trained model with a low-pass filter, aligning CAMs, and applying a difficulty-aware loss function, all without access to real-world training data.
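The CAM-alignment and difficulty-aware soft-label ideas can be sketched as loss terms. The NumPy code below is a minimal illustration under assumptions that are not taken from the paper: it uses the classic CAM (final convolutional feature maps weighted by the classifier weights of the target class), compares the two normalized maps with mean squared error, measures difficulty as one minus the pre-trained model's top-class probability with a hypothetical `threshold`, and takes hard labels to be the classes the synthetic samples were generated for. All function names and the weight `lam` are hypothetical; SynQ's actual CAM variant, difficulty measure, and loss weighting may differ.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    z = logits - logits.max(axis=-1, keepdims=True)  # subtract max for stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def class_activation_map(features: np.ndarray, fc_weights: np.ndarray, class_idx: int) -> np.ndarray:
    """Classic CAM (assumed variant). features: (K, H, W) final conv maps;
    fc_weights: (num_classes, K). Returns an (H, W) map scaled to [0, 1]."""
    cam = np.tensordot(fc_weights[class_idx], features, axes=1)  # weighted sum over K channels
    cam = np.maximum(cam, 0.0)  # keep only positive evidence for the class
    return cam / (cam.max() + 1e-8)


def cam_alignment_loss(cam_pretrained: np.ndarray, cam_quantized: np.ndarray) -> float:
    """Hypothetical alignment term: mean squared difference of the normalized maps."""
    return float(np.mean((cam_pretrained - cam_quantized) ** 2))


def difficulty_aware_loss(teacher_logits, student_logits, hard_labels, threshold=0.5):
    """Soft-label KL(teacher || student) for every sample; hard-label cross-entropy
    only for samples judged easy. Difficulty = 1 - teacher's top-class probability
    (assumed measure; `threshold` is a hypothetical parameter)."""
    p_t = softmax(teacher_logits)
    p_s = softmax(student_logits)
    kl = np.sum(p_t * (np.log(p_t + 1e-12) - np.log(p_s + 1e-12)), axis=-1)
    ce = -np.log(p_s[np.arange(len(hard_labels)), hard_labels] + 1e-12)
    easy = (1.0 - p_t.max(axis=-1)) < threshold
    return float(np.mean(kl + np.where(easy, ce, 0.0)))


# Example with toy numbers: sample 0 is easy, sample 1 is difficult.
teacher = np.array([[4.0, 0.0, 0.0],   # confident pre-trained prediction
                    [0.1, 0.0, 0.0]])  # near-uniform pre-trained prediction
student = np.array([[3.0, 0.5, 0.0],
                    [0.0, 0.2, 0.0]])
labels = np.array([0, 1])
rng = np.random.default_rng(0)
feats_fp, feats_q = rng.normal(size=(2, 8, 7, 7))  # hypothetical K=8, 7x7 feature maps
fc = rng.normal(size=(3, 8))  # classifier weights, shared here for brevity
lam = 1.0  # hypothetical weight for the alignment term
total = difficulty_aware_loss(teacher, student, labels) + lam * cam_alignment_loss(
    class_activation_map(feats_fp, fc, 0), class_activation_map(feats_q, fc, 0))
print(total)
```

Dropping the hard-label term for difficult samples means the quantized model only imitates the pre-trained model's full probability distribution on those samples, rather than being pushed toward a label that the pre-trained model itself is unsure about.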

Moving forward, SynQ is expected to become a core technology in the AI industry, enabling the deployment of high-performance, lightweight deep learning models. In particular, because SynQ allows accurate model compression without training data, it could be widely applied on resource-constrained edge devices such as smartphones, IoT devices, and autonomous vehicle sensors.

Professor Kang, the lead researcher, emphasized, “SynQ offers a major advantage in enabling model compression without compromising personal data privacy, thereby helping to mitigate security and privacy concerns.” He added, “This technique can also help small businesses and institutions that lack access to large datasets adopt high-performance AI models, which will accelerate the proliferation and democratization of AI technologies across various fields.”

▲ SynQ Key Concepts: Idea 1) Low-pass filtering to reduce noise; Idea 2) Class Activation Map (CAM) alignment; Idea 3) Use of soft labels for difficult samples

[Reference Material]

- Paper Title/Conference: SynQ: Accurate Zero-shot Quantization by Synthesis-aware Fine-tuning, ICLR 2025

- Paper Link: https://openreview.net/pdf?id=2rnOgyFQgb

[Contact Information]

Professor U Kang, Interdisciplinary Program in Artificial Intelligence & Department of Computer Science and Engineering, Seoul National University / +82-2-880-725 / ukang@snu.ac.kr